Practice CKAD exam questions with a high-quality CKAD PDF question bank

The latest Linux Foundation CKAD exam question bank PDF (updated 2023)

Question # 12

Refer to Exhibit.

Task:

The pod for the Deployment named nosql in the crayfish namespace fails to start because its container runs out of resources.

Update the nosql Deployment so that the Pod:

1) Requests 160M of memory for its container

2) Limits the memory to half the maximum memory constraint set for the crayfish namespace.

Answer:

Explanation:

Solution:
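A minimal sketch of the change, written as a fragment of the Deployment manifest, is shown below. The container name and the 256Mi limit are assumptions: the limit presumes the namespace's LimitRange reports a maximum memory of 512Mi, so read the real value with kubectl describe limitrange -n crayfish and use half of it.

```yaml
# Fragment of the nosql Deployment, edited in place with:
#   kubectl edit deployment nosql -n crayfish
# Assumption: the LimitRange max memory is 512Mi, so the limit is 256Mi.
spec:
  template:
    spec:
      containers:
      - name: nosql          # hypothetical; keep the existing container name
        resources:
          requests:
            memory: 160M     # request taken from the task
          limits:
            memory: 256Mi    # half of the assumed 512Mi maximum
```

Saving the edit changes the pod template, which triggers a new rollout, so the Pod is recreated with the new resource settings.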

Question # 13

Exhibit:

Context

A web application requires a specific version of redis to be used as a cache.

Task

Create a pod with the following characteristics, and leave it running when complete:

* The pod must run in the web namespace. The namespace has already been created.

* The name of the pod should be cache

* Use the lfccncf/redis image with the 3.2 tag

* Expose port 6379

- A. Solution:

- B. Solution:

Answer: A

Question # 14

Refer to Exhibit.

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

A web application requires a specific version of redis to be used as a cache.

Task

Create a pod with the following characteristics, and leave it running when complete:

* The pod must run in the web namespace. The namespace has already been created.

* The name of the pod should be cache

* Use the lfccncf/redis image with the 3.2 tag

* Expose port 6379

Answer:

Explanation:

Solution:
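One way to satisfy the requirements is a single Pod manifest like the sketch below, created with kubectl apply -f. The container name cache is an assumption, since the task only names the pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cache
  namespace: web          # the namespace already exists
spec:
  containers:
  - name: cache           # assumed container name
    image: lfccncf/redis:3.2
    ports:
    - containerPort: 6379 # port exposed by the container
```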

Question # 15

Context

Task:

The pod for the Deployment named nosql in the crayfish namespace fails to start because its container runs out of resources.

Update the nosql Deployment so that the Pod:

1) Requests 160M of memory for its container

2) Limits the memory to half the maximum memory constraint set for the crayfish namespace.

Answer:

Explanation:

Solution:

Question # 16

Context

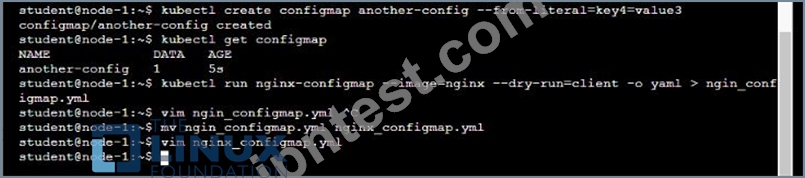

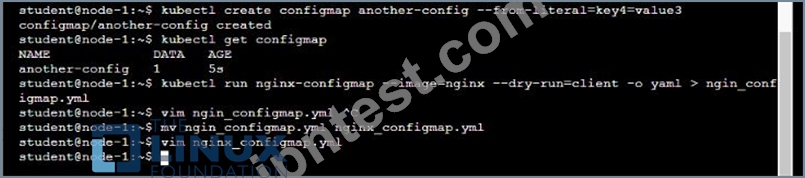

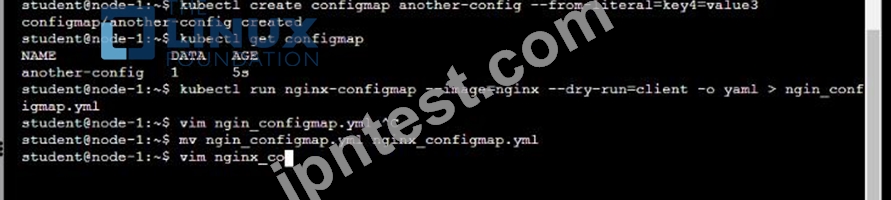

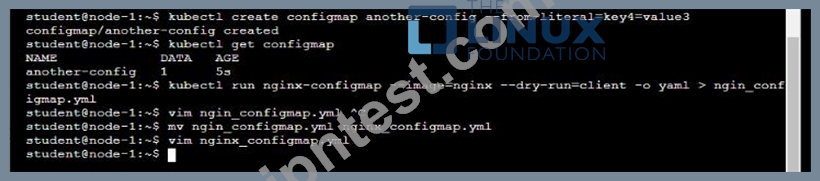

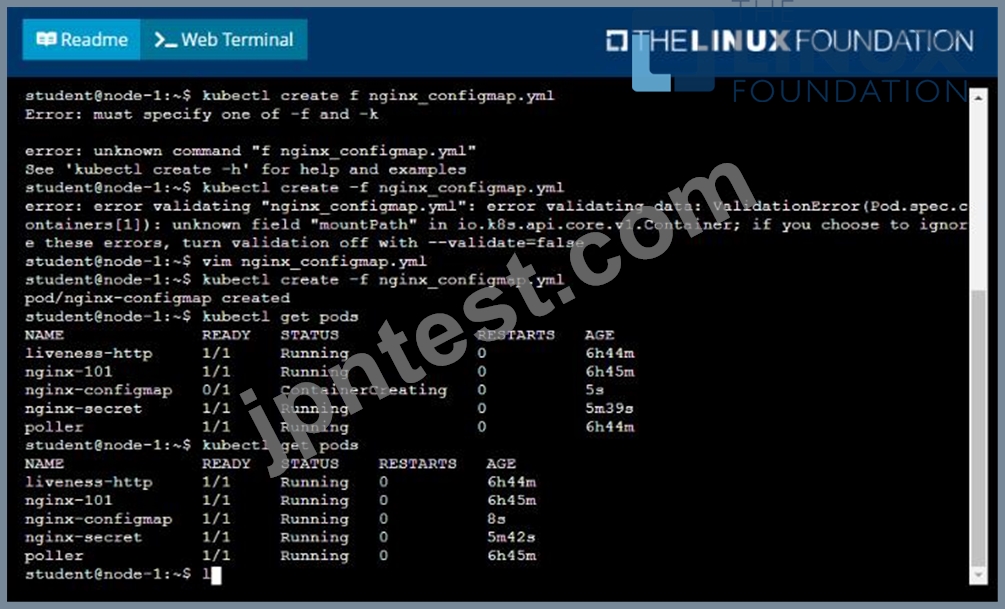

You are tasked to create a ConfigMap and consume the ConfigMap in a pod using a volume mount.

Task

Please complete the following:

* Create a ConfigMap named another-config containing the key/value pair: key4/value3

* Start a pod named nginx-configmap containing a single container using the nginx image, and mount the key you just created into the pod under the directory /also/a/path

Answer:

Explanation:

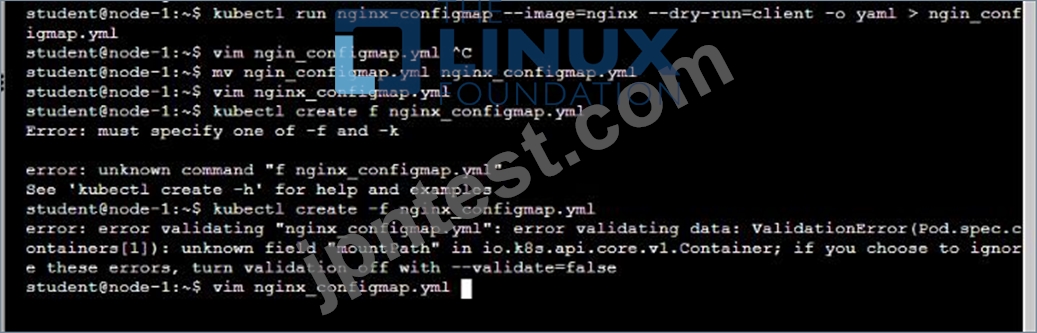

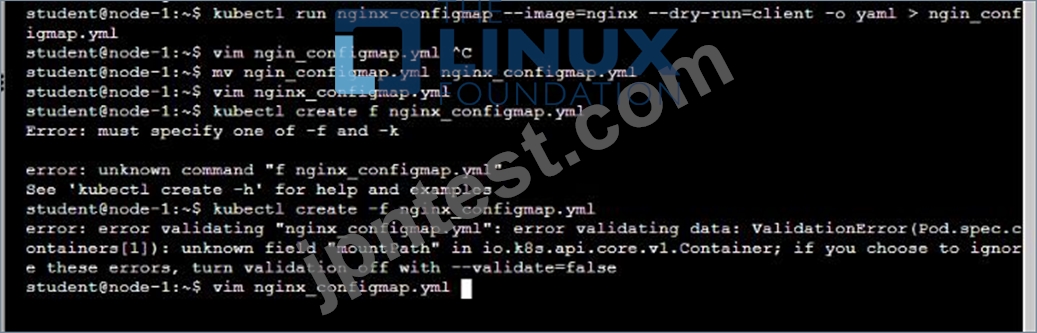

Solution:
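A minimal sketch of both objects follows; the volume name config-volume is a hypothetical choice. The ConfigMap could equally be created imperatively with kubectl create configmap another-config --from-literal=key4=value3.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: another-config
data:
  key4: value3
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-configmap
spec:
  containers:
  - name: nginx-configmap
    image: nginx
    volumeMounts:
    - name: config-volume      # hypothetical volume name
      mountPath: /also/a/path
  volumes:
  - name: config-volume
    configMap:
      name: another-config     # each key becomes a file in the mount
```

Mounting the whole ConfigMap as a volume turns each key into a file, so the pod should see /also/a/path/key4 containing value3.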

Question # 17

Exhibit:

Context

You are tasked to create a ConfigMap and consume the ConfigMap in a pod using a volume mount.

Task

Please complete the following:

* Create a ConfigMap named another-config containing the key/value pair: key4/value3

* Start a pod named nginx-configmap containing a single container using the nginx image, and mount the key you just created into the pod under the directory /also/a/path

- A. Solution:

- B. Solution:

Answer: A

Question # 18

Exhibit:

Task

Create a new deployment for running nginx with the following parameters:

* Run the deployment in the kdpd00201 namespace. The namespace has already been created

* Name the deployment frontend and configure with 4 replicas

* Configure the pod with a container image of lfccncf/nginx:1.13.7

* Set an environment variable of NGINX_PORT=8080 and also expose that port for the container above

- A. Solution:

- B. Solution:

Answer: B

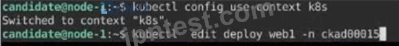

Question # 19

Task:

1- Update the proportional scaling configuration of the Deployment web1 in the ckad00015 namespace, setting maxSurge to 2 and maxUnavailable to 59

2- Update the web1 Deployment to use version tag 1.13.7 for the lfccncf/nginx container image.

3- Perform a rollback of the web1 Deployment to its previous version.

Answer:

Explanation:

See the solution below.

Explanation

Solution:
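A sketch of step 1 as a fragment of the web1 Deployment, opened with kubectl edit deployment web1 -n ckad00015. The values are copied from the task text as written.

```yaml
# Fragment of the web1 Deployment in the ckad00015 namespace
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 2          # extra Pods allowed above the desired count
      maxUnavailable: 59   # value taken from the task text as written
```

Steps 2 and 3 are imperative: kubectl set image deployment/web1 <container-name>=lfccncf/nginx:1.13.7 -n ckad00015 updates the image (the container name must be the one defined in the Deployment), and kubectl rollout undo deployment/web1 -n ckad00015 rolls back to the previous revision.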


Question # 20

Exhibit:

Context

A user has reported that an application is unreachable due to a failing livenessProbe.

Task

Perform the following tasks:

* Find the broken pod and store its name and namespace to /opt/KDOB00401/broken.txt in the format:

The output file has already been created

* Store the associated error events in the file /opt/KDOB00401/error.txt. The output file has already been created. You will need to use the -o wide output specifier with your command

* Fix the issue.

- A. Solution:

Create the Pod:

kubectl create -f http://k8s.io/docs/tasks/configure-pod-container/exec-liveness.yaml

Within 30 seconds, view the Pod events:

kubectl describe pod liveness-exec

The output indicates that no liveness probes have failed yet:

FirstSeen LastSeen Count From SubobjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

24s 24s 1 {default-scheduler } Normal Scheduled Successfully assigned liveness-exec to worker0

23s 23s 1 {kubelet worker0} spec.containers{liveness} Normal Pulling pulling image "gcr.io/google_containers/busybox"

23s 23s 1 {kubelet worker0} spec.containers{liveness} Normal Pulled Successfully pulled image "gcr.io/google_containers/busybox"

23s 23s 1 {kubelet worker0} spec.containers{liveness} Normal Created Created container with docker id 86849c15382e; Security:[seccomp=unconfined]

23s 23s 1 {kubelet worker0} spec.containers{liveness} Normal Started Started container with docker id 86849c15382e

After 35 seconds, view the Pod events again:

kubectl describe pod liveness-exec

At the bottom of the output, there are messages indicating that the liveness probes have failed, and the containers have been killed and recreated.

FirstSeen LastSeen Count From SubobjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

37s 37s 1 {default-scheduler } Normal Scheduled Successfully assigned liveness-exec to worker0

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Pulling pulling image "gcr.io/google_containers/busybox"

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Pulled Successfully pulled image "gcr.io/google_containers/busybox"

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Created Created container with docker id 86849c15382e; Security:[seccomp=unconfined]

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Started Started container with docker id 86849c15382e

2s 2s 1 {kubelet worker0} spec.containers{liveness} Warning Unhealthy Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory

Wait another 30 seconds, and verify that the Container has been restarted:

kubectl get pod liveness-exec

The output shows that RESTARTS has been incremented:

NAME READY STATUS RESTARTS AGE

liveness-exec 1/1 Running 1 m

- B. Solution:

Create the Pod:

kubectl create -f http://k8s.io/docs/tasks/configure-pod-container/exec-liveness.yaml

Within 30 seconds, view the Pod events:

kubectl describe pod liveness-exec

The output indicates that no liveness probes have failed yet:

FirstSeen LastSeen Count From SubobjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

24s 24s 1 {default-scheduler } Normal Scheduled Successfully assigned liveness-exec to worker0

23s 23s 1 {kubelet worker0} spec.containers{liveness} Normal Pulling pulling image "gcr.io/google_containers/busybox"

kubectl describe pod liveness-exec

At the bottom of the output, there are messages indicating that the liveness probes have failed, and the containers have been killed and recreated.

FirstSeen LastSeen Count From SubobjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

37s 37s 1 {default-scheduler } Normal Scheduled Successfully assigned liveness-exec to worker0

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Pulling pulling image "gcr.io/google_containers/busybox"

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Pulled Successfully pulled image "gcr.io/google_containers/busybox"

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Created Created container with docker id 86849c15382e; Security:[seccomp=unconfined]

36s 36s 1 {kubelet worker0} spec.containers{liveness} Normal Started Started container with docker id 86849c15382e

2s 2s 1 {kubelet worker0} spec.containers{liveness} Warning Unhealthy Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory

Wait another 30 seconds, and verify that the Container has been restarted:

kubectl get pod liveness-exec

The output shows that RESTARTS has been incremented:

NAME READY STATUS RESTARTS AGE

liveness-exec 1/1 Running 1 m

Answer: A
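Both options create the exec-liveness example from the Kubernetes documentation. A sketch of that manifest, based on the docs example, shows why the probe fails: the container removes /tmp/healthy after 30 seconds, so cat /tmp/healthy starts failing and the kubelet restarts the container. The image reference is an assumption that differs between doc versions; the events above show the older gcr.io/google_containers/busybox.

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: registry.k8s.io/busybox   # older docs used gcr.io/google_containers/busybox
    args:
    - /bin/sh
    - -c
    # healthy for 30s, then the probe target disappears
    - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5   # wait before the first probe
      periodSeconds: 5         # probe every 5 seconds
```

For the task itself, a reasonable sequence is to locate the failing pod with kubectl get pods --all-namespaces, save its events with kubectl get events -n <namespace> -o wide, and then correct the probe (command, path, or port) so that it matches what the container actually provides.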

Question # 21

Refer to Exhibit.

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

A container within the poller pod is hard-coded to connect to the nginxsvc service on port 90. As this port changes to 5050, an additional container needs to be added to the poller pod to adapt the container to the new port. This should be realized as an ambassador container within the pod.

Task

* Update the nginxsvc service to serve on port 5050.

* Add an HAproxy container named haproxy bound to port 90 to the poller pod and deploy the enhanced pod. Use the image haproxy and inject the configuration located at /opt/KDMC00101/haproxy.cfg, with a ConfigMap named haproxy-config, mounted into the container so that haproxy.cfg is available at /usr/local/etc/haproxy/haproxy.cfg. Ensure that you update the args of the poller container to connect to localhost instead of nginxsvc so that the connection is correctly proxied to the new service endpoint. You must not modify the port of the endpoint in the poller's args. The spec file used to create the initial poller pod is available at /opt/KDMC00101/poller.yaml

Answer:

Explanation:

Solution:

To update the nginxsvc service to serve on port 5050, edit the Service in place with the kubectl edit command:

kubectl edit svc nginxsvc

This opens the Service definition in your default editor. Change the port of the Service (the port field under spec.ports) to 5050 and save the file. Keep targetPort set to the port the nginx container listens on.
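A sketch of the relevant part of the edited Service follows. The targetPort value 80 is an assumption for illustration. If the original Service omitted targetPort, it defaults to the value of port, so it should be set explicitly; otherwise traffic would be sent to 5050 on the nginx pods after the change.

```yaml
# Fragment of the nginxsvc Service, opened with: kubectl edit svc nginxsvc
spec:
  ports:
  - port: 5050        # port the Service now serves on
    targetPort: 80    # hypothetical: use the port the nginx container listens on
    protocol: TCP
```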

To add an HAproxy container named haproxy bound to port 90 to the poller pod, edit the pod's definition file at /opt/KDMC00101/poller.yaml. Append the new container to the existing containers list (keep the poller container) and add a matching entry to the pod's volumes list:

    containers:
    # ... existing poller container stays here ...
    - name: haproxy
      image: haproxy
      ports:
      - containerPort: 90
      volumeMounts:
      - name: haproxy-config
        mountPath: /usr/local/etc/haproxy/haproxy.cfg
        subPath: haproxy.cfg
      args: ["haproxy", "-f", "/usr/local/etc/haproxy/haproxy.cfg"]
    volumes:
    - name: haproxy-config
      configMap:
        name: haproxy-config

This adds the HAproxy container to the pod and declares port 90 for it (the port HAproxy actually binds to is set in haproxy.cfg). The haproxy-config volume mounts the ConfigMap of the same name into the container, and subPath places only the haproxy.cfg key at /usr/local/etc/haproxy/haproxy.cfg.

To inject the configuration located at /opt/KDMC00101/haproxy.cfg into the container, create the ConfigMap from that file. The key defaults to the file name, haproxy.cfg, which matches the subPath in the container definition:

kubectl create configmap haproxy-config --from-file=/opt/KDMC00101/haproxy.cfg

You also need to update the args of the poller container so that it connects to localhost instead of nginxsvc. In the existing args, replace only the host name nginxsvc with localhost and keep the port as it is, since the task forbids changing it.

The container list of a running Pod cannot be changed in place, so if the original poller pod is already running, delete it first and then create the enhanced pod from the updated file:

kubectl delete pod poller

kubectl apply -f /opt/KDMC00101/poller.yaml

This deploys the enhanced pod with the HAproxy container. HAproxy listens on port 90 and, as configured in haproxy.cfg, proxies connections to the nginxsvc service on port 5050. Because the poller container now connects to localhost, its traffic goes through the ambassador and reaches the new service endpoint.

Note that this is a basic example; you may need to adjust the haproxy.cfg file and the args for your environment.

Question # 22

Refer to Exhibit.

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

You sometimes need to observe a pod's logs, and write those logs to a file for further analysis.

Task

Please complete the following:

* Deploy the counter pod to the cluster using the provided YAML spec file at /opt/KDOB00201/counter.yaml

* Retrieve all currently available application logs from the running pod and store them in the file /opt/KDOB00201/log_Output.txt, which has already been created

Answer:

Explanation:

Solution:

To deploy the counter pod to the cluster using the provided YAML spec file, you can use the kubectl apply command. The apply command creates and updates resources in a cluster.

kubectl apply -f /opt/KDOB00201/counter.yaml

This command will create the pod in the cluster. You can use the kubectl get pods command to check the status of the pod and ensure that it is running.

kubectl get pods

To retrieve all currently available application logs from the running pod and store them in the file /opt/KDOB00201/log_Output.txt, use the kubectl logs command without -f, so that it prints the logs collected so far and exits:

kubectl logs <pod-name> > /opt/KDOB00201/log_Output.txt

Replace <pod-name> with the name of the pod.

The -f option streams the logs instead; the command keeps running until interrupted, so run it in the background if you want new lines to keep being appended to the file:

kubectl logs -f <pod-name> > /opt/KDOB00201/log_Output.txt &

Either command writes the logs from the pod to the /opt/KDOB00201/log_Output.txt file.

Note that kubectl logs returns the logs of the current container instance; use --previous to see the logs of an instance that has already terminated. To limit the output to recent entries, add the --since flag with a relative duration, for example --since=24h for logs generated in the last 24 hours.

Also note that if the pod has multiple containers, you need to specify the container name with the -c option:

kubectl logs <pod-name> -c <container-name> > /opt/KDOB00201/log_Output.txt

This command redirects the logs of the specified container to the file.

Question # 23

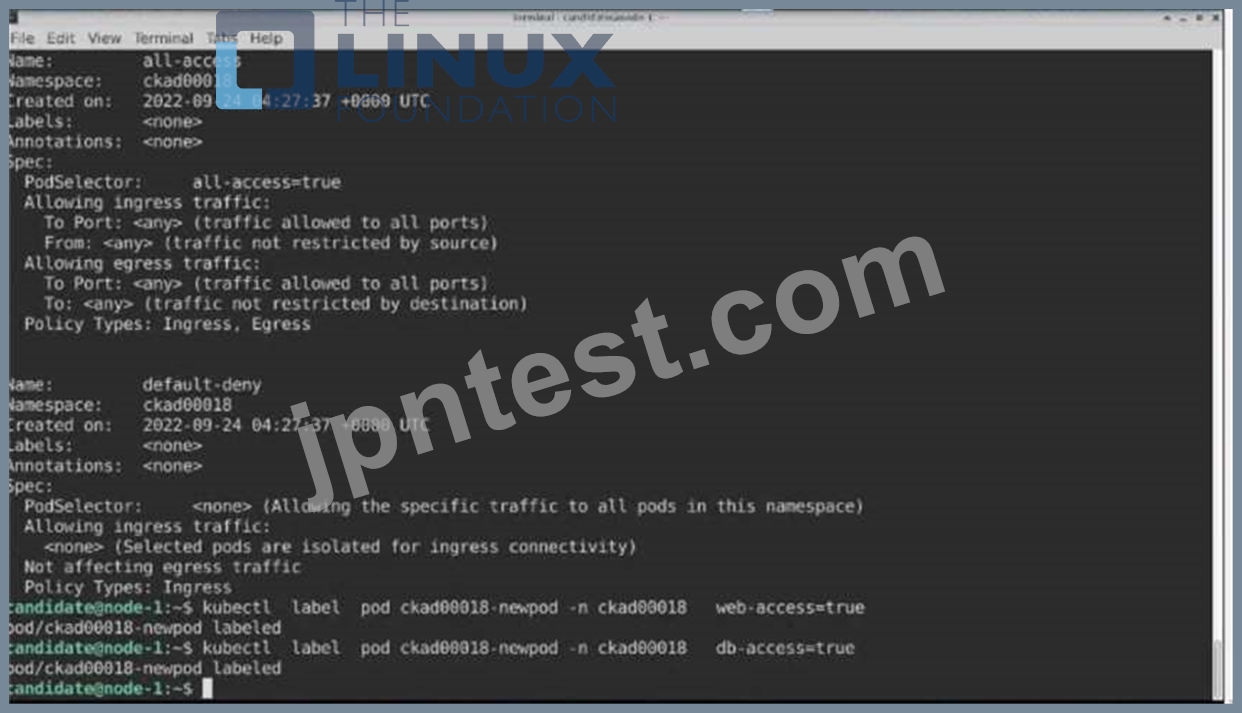

Task:

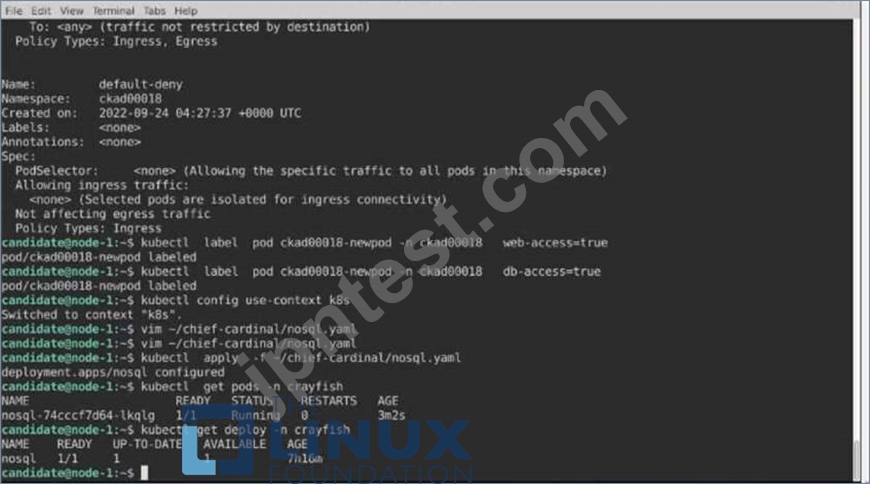

Update the Pod ckad00018-newpod in the ckad00018 namespace to use a NetworkPolicy allowing the Pod to send and receive traffic only to and from the pods web and db

Answer:

Explanation:

See the solution below.

Explanation

Solution:
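One common approach is to inspect the existing policies with kubectl get networkpolicy -n ckad00018 and give ckad00018-newpod the labels those policies select. If a policy has to be written instead, a sketch could look like the one below; the policy name and every label are hypothetical and must be matched to the labels actually on the pods.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: newpod-web-db          # hypothetical name
  namespace: ckad00018
spec:
  podSelector:
    matchLabels:
      app: newpod              # hypothetical label on ckad00018-newpod
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:                      # two selectors in one list: web OR db
    - podSelector:
        matchLabels:
          app: web             # hypothetical label on the web pod
    - podSelector:
        matchLabels:
          app: db              # hypothetical label on the db pod
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: web
    - podSelector:
        matchLabels:
          app: db
```

Because the policy restricts egress, DNS lookups from the Pod are blocked as well unless another rule allows traffic to the cluster DNS.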

Question # 24

Context

A web application requires a specific version of redis to be used as a cache.

Task

Create a pod with the following characteristics, and leave it running when complete:

* The pod must run in the web namespace. The namespace has already been created.

* The name of the pod should be cache

* Use the lfccncf/redis image with the 3.2 tag

* Expose port 6379

Answer:

Explanation:

Solution:

Question # 25

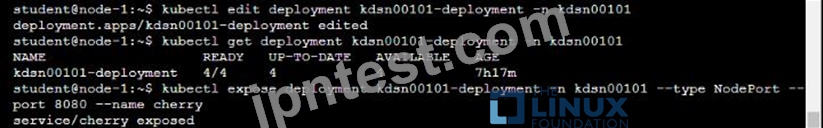

Refer to Exhibit.

Context

You have been tasked with scaling an existing deployment for availability, and creating a service to expose the deployment within your infrastructure.

Task

Start with the deployment named kdsn00101-deployment, which has already been deployed to the namespace kdsn00101. Edit it to:

* Add the func=webFrontEnd key/value label to the pod template metadata to identify the pod for the service definition

* Have 4 replicas

Next, create and deploy in namespace kdsn00101 a service that accomplishes the following:

* Exposes the service on TCP port 8080

* Is mapped to the pods defined by the specification of kdsn00101-deployment

* Is of type NodePort

* Has a name of cherry

Answer:

Explanation:

Solution:

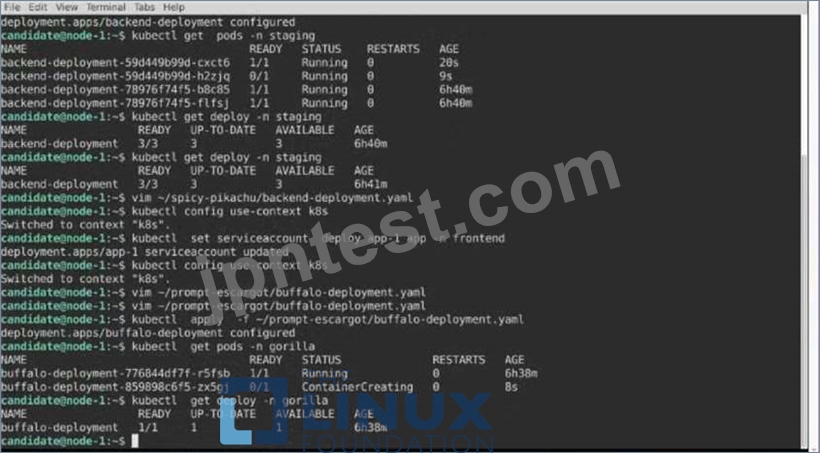
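A sketch of the cherry Service is shown below. It assumes the func=webFrontEnd label has already been added to the pod template and the Deployment scaled to 4 replicas (for example with kubectl scale deployment kdsn00101-deployment --replicas=4 -n kdsn00101). targetPort is left out, so it defaults to port 8080; that is an assumption about the port the container listens on.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: cherry
  namespace: kdsn00101
spec:
  type: NodePort
  selector:
    func: webFrontEnd   # matches the label added to the pod template
  ports:
  - protocol: TCP
    port: 8080          # targetPort defaults to this value when omitted
```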

Question # 26

Context

Task:

A pod within the Deployment named buffalo-deployment in namespace gorilla is logging errors.

1) Look at the logs and identify the error messages.

Find errors, including User "system:serviceaccount:gorilla:default" cannot list resource "deployment" [...] in the namespace "gorilla"

2) Update the Deployment buffalo-deployment to resolve the errors in the logs of the Pod.

The buffalo-deployment's manifest can be found at ~/prompt/escargot/buffalo-deployment.yaml

Answer:

Explanation:

Solution:

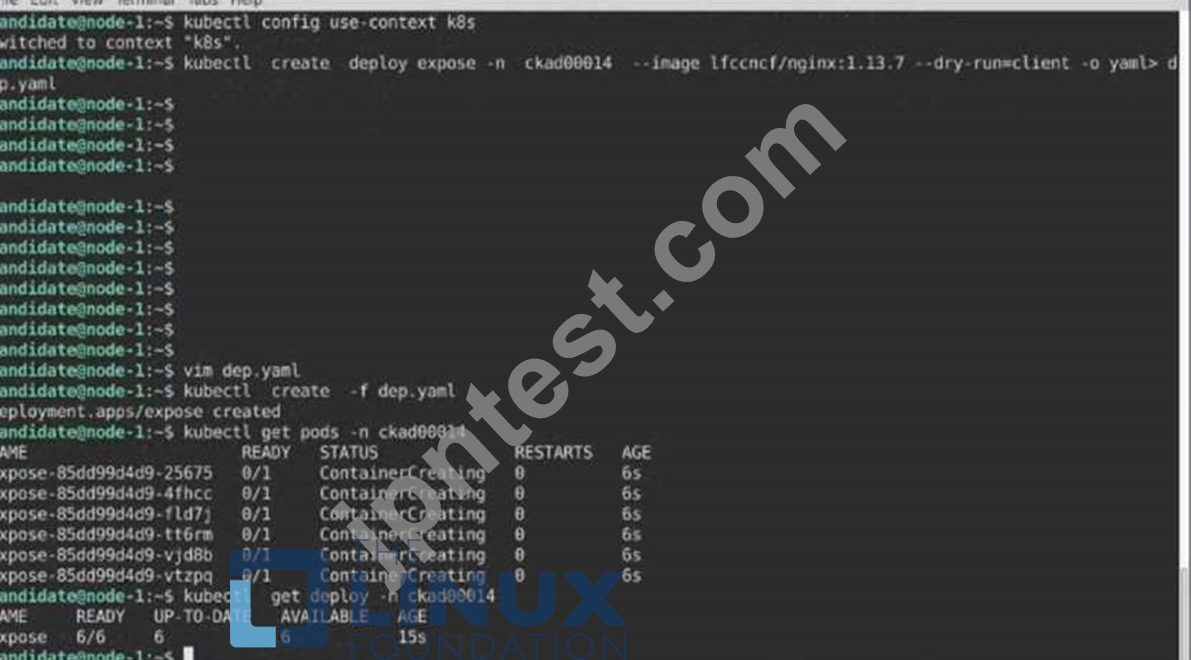
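The error says the default ServiceAccount may not list Deployments. One way to grant that permission is a ServiceAccount bound to a Role that can read Deployments. The names buffalo-sa, deployment-reader and buffalo-sa-deployment-reader are hypothetical; if the namespace already contains a suitable ServiceAccount and Role, reusing them is likely what is intended.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: buffalo-sa                  # hypothetical name
  namespace: gorilla
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: deployment-reader           # hypothetical name
  namespace: gorilla
rules:
- apiGroups: ["apps"]               # Deployments live in the apps API group
  resources: ["deployments"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: buffalo-sa-deployment-reader
  namespace: gorilla
subjects:
- kind: ServiceAccount
  name: buffalo-sa
  namespace: gorilla
roleRef:
  kind: Role
  name: deployment-reader
  apiGroup: rbac.authorization.k8s.io
```

Then set spec.template.spec.serviceAccountName: buffalo-sa in the buffalo-deployment manifest and apply it with kubectl apply -f, which rolls out Pods that run with the new identity.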

Question # 27

Context

Task:

Create a Deployment named expose in the existing ckad00014 namespace running 6 replicas of a Pod. Specify a single container using the lfccncf/nginx:1.13.7 image. Add an environment variable named NGINX_PORT with the value 8001 to the container, then expose port 8001.

Answer:

Explanation:

Solution:
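A minimal Deployment manifest for this task might look like the sketch below. The label app: expose and the container name nginx are assumptions, since the task does not name them.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: expose
  namespace: ckad00014
spec:
  replicas: 6
  selector:
    matchLabels:
      app: expose          # assumed label; must match the template labels
  template:
    metadata:
      labels:
        app: expose
    spec:
      containers:
      - name: nginx        # assumed container name
        image: lfccncf/nginx:1.13.7
        env:
        - name: NGINX_PORT
          value: "8001"    # env values must be strings
        ports:
        - containerPort: 8001
```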

Question # 28

Refer to Exhibit.

Context

You are tasked to create a ConfigMap and consume the ConfigMap in a pod using a volume mount.

Task

Please complete the following:

* Create a ConfigMap named another-config containing the key/value pair: key4/value3

* Start a pod named nginx-configmap containing a single container using the nginx image, and mount the key you just created into the pod under the directory /also/a/path

Answer:

Explanation:

Solution:

Question # 29

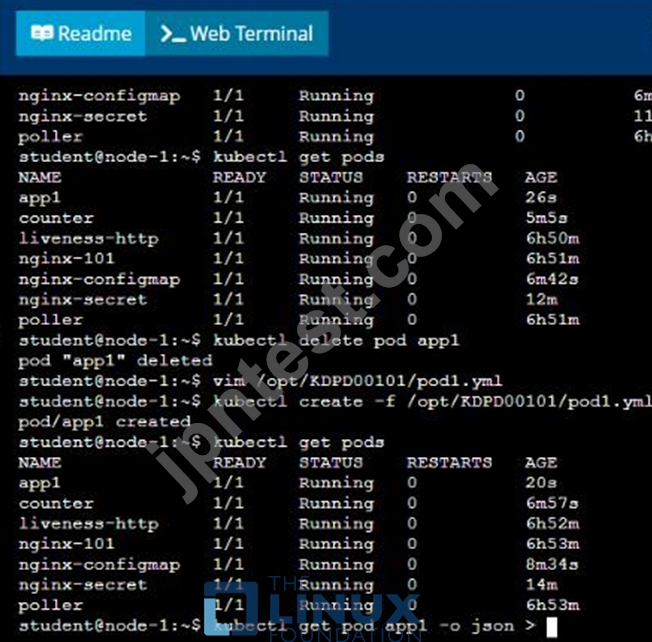

Context

Anytime a team needs to run a container on Kubernetes they will need to define a pod within which to run the container.

Task

Please complete the following:

* Create a YAML formatted pod manifest /opt/KDPD00101/pod1.yml to create a pod named app1 that runs a container named app1cont using image lfccncf/arg-output with these command line arguments: -lines 56 -F

* Create the pod with the kubectl command using the YAML file created in the previous step

* When the pod is running, display summary data about the pod in JSON format using the kubectl command and redirect the output to a file named /opt/KDPD00101/out1.json

* All of the files you need to work with have been created, empty, for your convenience

- A. Solution:

- B. Solution:

Answer: B
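A sketch of the manifest for the first step; each command line argument becomes a separate string in args, which is passed to the image's entrypoint.

```yaml
# /opt/KDPD00101/pod1.yml
apiVersion: v1
kind: Pod
metadata:
  name: app1
spec:
  containers:
  - name: app1cont
    image: lfccncf/arg-output
    args: ["-lines", "56", "-F"]   # one list item per argument
```

The remaining steps could then be kubectl create -f /opt/KDPD00101/pod1.yml and, once the pod is running, kubectl get pod app1 -o json > /opt/KDPD00101/out1.json.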

Question # 30

Context

Your application's namespace requires a specific service account to be used.

Task

Update the app-a deployment in the production namespace to run as the restrictedservice service account. The service account has already been created.

Answer:

Explanation:

Solution:

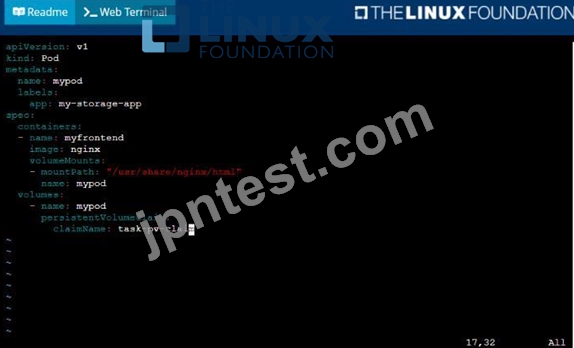
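The change amounts to setting serviceAccountName in the pod template. Either run kubectl set serviceaccount deployment app-a restrictedservice -n production, or edit the Deployment so that it contains the following fragment (shown as a sketch):

```yaml
# Fragment of the app-a Deployment in the production namespace
spec:
  template:
    spec:
      serviceAccountName: restrictedservice   # existing ServiceAccount
```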

Question # 31

Context

A project that you are working on has a requirement for persistent data to be available.

Task

To facilitate this, perform the following tasks:

* Create a file on node sk8s-node-0 at /opt/KDSP00101/data/index.html with the content Acct=Finance

* Create a PersistentVolume named task-pv-volume using hostPath and allocate 1Gi to it, specifying that the volume is at /opt/KDSP00101/data on the cluster's node. The configuration should specify the access mode of ReadWriteOnce. It should define the StorageClass name exam for the PersistentVolume, which will be used to bind PersistentVolumeClaim requests to this PersistentVolume.

* Create a PersistentVolumeClaim named task-pv-claim that requests a volume of at least 100Mi and specifies an access mode of ReadWriteOnce

* Create a pod that uses the PersistentVolumeClaim as a volume with a label app: my-storage-app, mounting the resulting volume to a mountPath /usr/share/nginx/html inside the pod

Answer:

Explanation:

See the solution below.

Explanation

Solution:

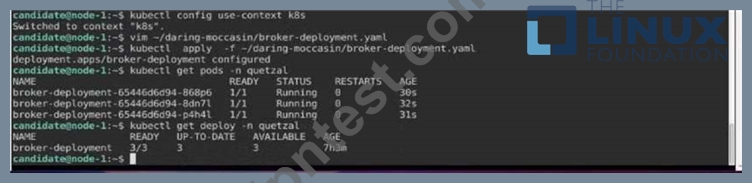
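A sketch of the three objects follows. The pod name task-pv-pod, the container and volume names, and the nginx image are assumptions, since the task fixes only the label and the mountPath. The claim also sets storageClassName: exam so that it binds to this PersistentVolume.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
spec:
  storageClassName: exam
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: /opt/KDSP00101/data    # directory on the node
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: exam         # binds the claim to the exam PV
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod              # hypothetical pod name
  labels:
    app: my-storage-app
spec:
  volumes:
  - name: task-pv-storage        # hypothetical volume name
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container      # hypothetical container name
    image: nginx                 # assumed image, matching the mountPath
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: task-pv-storage
```

Because hostPath is local to a node, the pod only sees index.html if it runs on sk8s-node-0, where the file can be created beforehand, for example with echo 'Acct=Finance' > /opt/KDSP00101/data/index.html.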

Question # 32

Refer to Exhibit.

Task:

Modify the existing Deployment named broker-deployment running in namespace quetzal so that its containers:

1) Run with user ID 30000 and

2) Privilege escalation is forbidden

The broker-deployment's manifest file can be found at:

Answer:

Explanation:

Solution:
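A sketch of the relevant fragment of the broker-deployment manifest. The container name broker is hypothetical, and the securityContext block has to be added to every container in the Deployment. allowPrivilegeEscalation exists only at container level, while runAsUser could also be set in the pod-level securityContext.

```yaml
# Fragment of the broker-deployment in the quetzal namespace
spec:
  template:
    spec:
      containers:
      - name: broker                      # hypothetical container name
        securityContext:
          runAsUser: 30000                # run processes as UID 30000
          allowPrivilegeEscalation: false # forbid privilege escalation
```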

Question # 33

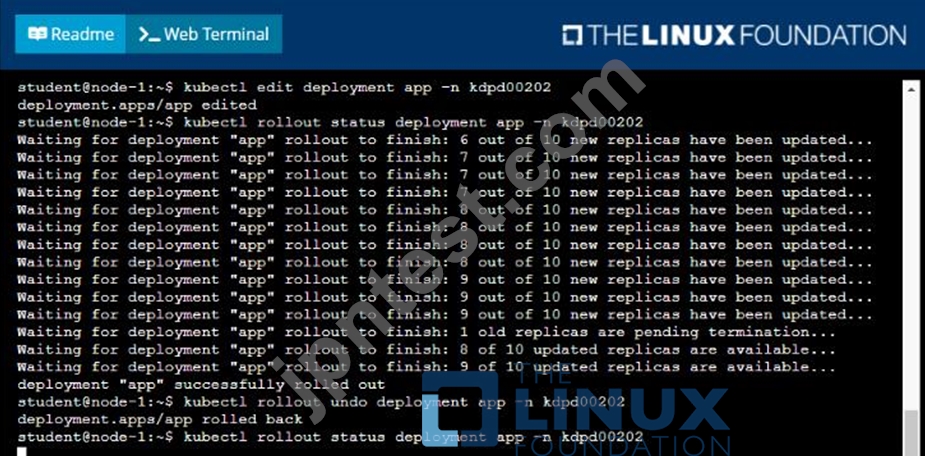

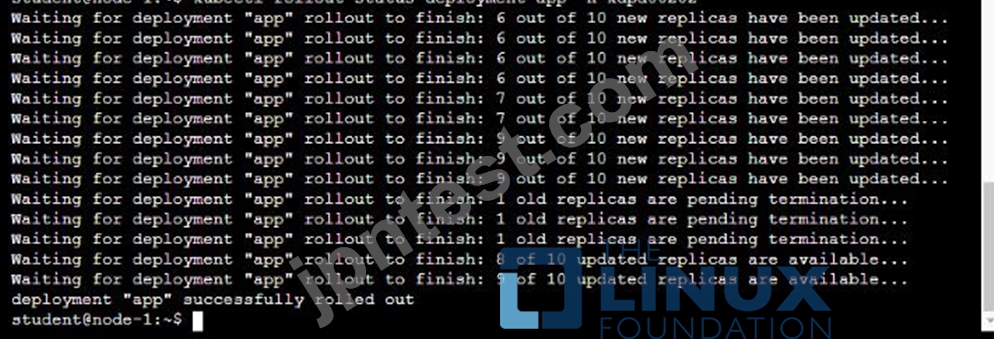

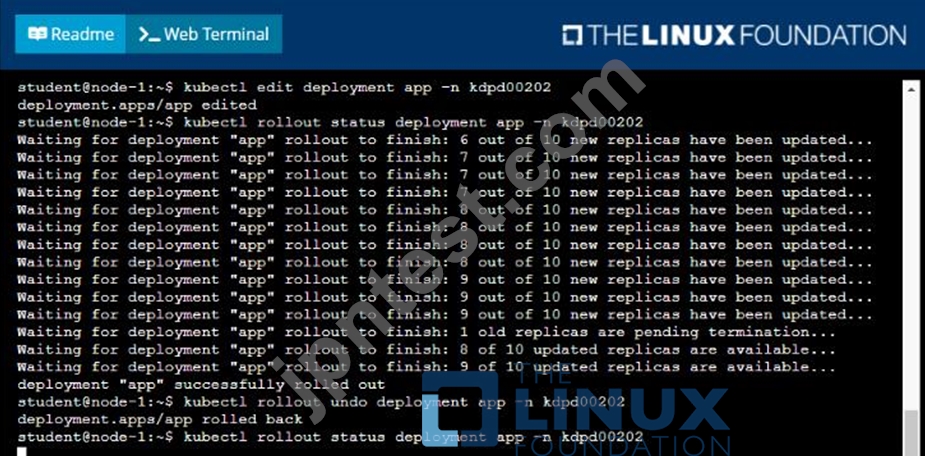

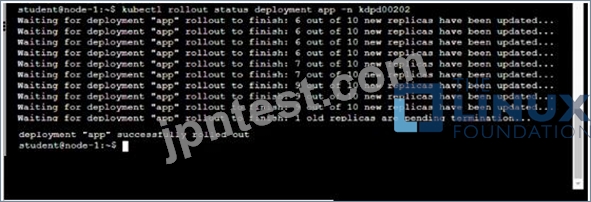

Exhibit:

Context

As a Kubernetes application developer you will often find yourself needing to update a running application.

Task

Please complete the following:

* Update the app deployment in the kdpd00202 namespace with a maxSurge of 5% and a maxUnavailable of 2%

* Perform a rolling update of the web1 deployment, changing the lfccncf/nginx image version to 1.13

* Roll back the app deployment to the previous version

- A. Solution:

- B. Solution:

Answer: A

Question # 34

......

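100% free Kubernetes Application Developer CKAD question bank PDF trial sample, certification guide coverage: https://www.jpntest.com/shiken/CKAD-mondaishu

PDF exam materials, the latest 2023 CKAD question bank with questions from the actual exam: https://drive.google.com/open?id=1RUXhUKsEg96SZrk3MweC9v5HvqenGnhJ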