[2022 Latest] Use the Best CKA Exam Question Set: Work Through Real Exam Questions and Answers

Practice CKA test questions with the test engine

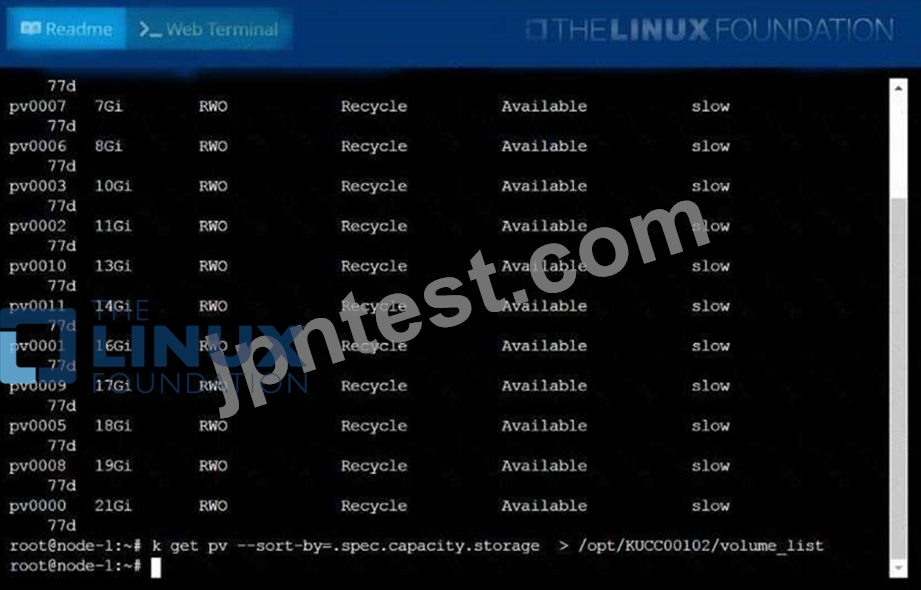

Question 22

List all persistent volumes sorted by capacity, saving the full kubectl output to /opt/KUCC00102/volume_list. Use kubectl's own functionality for sorting the output, and do not manipulate it any further.

Answer:

Explanation:

See the solution below.

Explanation

solution

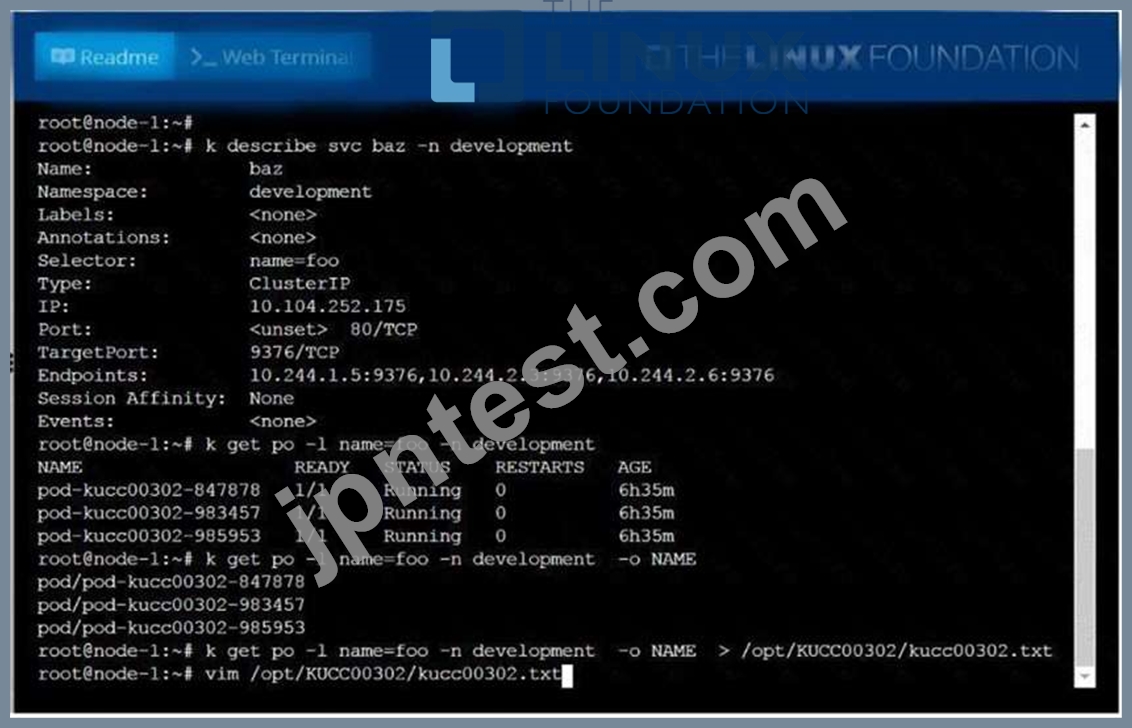

Question 23

Create a file /opt/KUCC00302/kucc00302.txt that lists all pods that implement service baz in namespace development.

The format of the file should be one pod name per line.

Answer:

Explanation:

See the solution below.

Explanation

solution

Question 24

Get a list of PVs ordered by size and write it to the file "/opt/pvstorage.txt".

Answer:

Explanation:

    kubectl get pv --sort-by=.spec.capacity.storage > /opt/pvstorage.txt

Question 25

Print each pod's name and start time to the file "/opt/pod-status".

Answer:

Explanation:

See the solution below.

Explanation

    kubectl get pods -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.startTime}{"\n"}{end}' > /opt/pod-status

Question 26

Score: 4%

Task

Scale the deployment presentation

Answer:

Explanation:

See the solution below.

Explanation

Solution:

    kubectl get deployment
    kubectl scale deployment.apps/presentation --replicas=6

Question 27

Create and configure the service front-end-service so it's accessible through NodePort and routes to the existing pod named front-end.

Answer:

Explanation:

See the solution below.

Explanation

solution
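A minimal declarative sketch of front-end-service. It assumes the existing pod front-end carries the label `run: front-end` (the label `kubectl run` adds by default) and serves on port 80; neither is stated in the task, so check the real labels with `kubectl get pod front-end --show-labels` and adjust the selector and ports before applying.

```yaml
# Sketch only: the selector label and port numbers are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: front-end-service
spec:
  type: NodePort          # expose the Service on a port of every node
  selector:
    run: front-end        # assumed label on the existing front-end pod
  ports:
    - port: 80            # Service port inside the cluster (assumed)
      targetPort: 80      # container port on the pod (assumed)
```

Alternatively, `kubectl expose pod front-end --name=front-end-service --type=NodePort --port=80` builds an equivalent Service from the pod's actual labels, which avoids guessing the selector. When `nodePort` is omitted, Kubernetes allocates one from the node port range (30000-32767 by default).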

Question 28

Create a hostPath PersistentVolume named task-pv-volume with storage 10Gi, access mode ReadWriteOnce, storageClassName manual, and the volume at /mnt/data, then verify it.

- A.

      vim task-pv-volume.yaml

      apiVersion: v1
      kind: PersistentVolume
      metadata:
        name: task-pv-volume
        labels:
          type: local
      spec:
        storageClassName: ""
        capacity:
          storage: 5Gi
        accessModes:
          - ReadWriteOnce
        hostPath:
          path: "/mnt/data"

      kubectl apply -f task-pv-volume.yaml
      # Verify
      kubectl get pv
      NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
      task-pv-volume   4Gi        RWO            Retain           Available                                   8s

- B.

      vim task-pv-volume.yaml

      apiVersion: v1
      kind: PersistentVolume
      metadata:
        name: task-pv-volume
        labels:
          type: local
      spec:
        storageClassName: manual
        capacity:
          storage: 10Gi
        accessModes:
          - ReadWriteOnce
        hostPath:
          path: "/mnt/data"

      kubectl apply -f task-pv-volume.yaml
      # Verify
      kubectl get pv
      NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
      task-pv-volume   10Gi       RWO            Retain           Available           manual                  3s

Answer: B

Question 29

Score: 4%

Context

You have been asked to create a new ClusterRole for a deployment pipeline and bind it to a specific ServiceAccount scoped to a specific namespace.

Task

Create a new ClusterRole named deployment-clusterrole, which only allows the creation of the following resource types:

* Deployment

* StatefulSet

* DaemonSet

Create a new ServiceAccount named cicd-token in the existing namespace app-team1.

Bind the new ClusterRole deployment-clusterrole to the new ServiceAccount cicd-token, limited to the namespace app-team1.

Answer:

Explanation:

See the solution below.

Explanation

Solution:

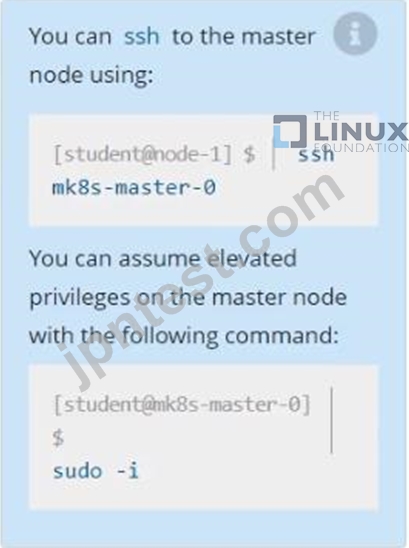

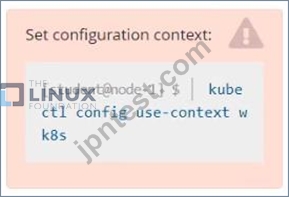

The task should be completed on the cluster with 1 master and 2 worker nodes. To connect to it, use:

    [student@node-1] > ssh k8s
    kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployments,statefulsets,daemonsets
    kubectl create serviceaccount cicd-token --namespace=app-team1
    kubectl create rolebinding deployment-clusterrole --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token --namespace=app-team1
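For reference, a declarative sketch of the same three objects. The ClusterRole grants only `create` on the three `apps` resources, and binding it through a namespaced RoleBinding (rather than a ClusterRoleBinding) is what limits the permission to app-team1. The object names come from the task; treat this as an equivalent of the imperative commands, not necessarily a byte-for-byte copy of what they generate.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: deployment-clusterrole
rules:
  - apiGroups: ["apps"]        # Deployments, StatefulSets and DaemonSets live in the apps group
    resources: ["deployments", "statefulsets", "daemonsets"]
    verbs: ["create"]          # only the create verb is granted
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cicd-token
  namespace: app-team1
---
# A RoleBinding (not a ClusterRoleBinding) scopes the ClusterRole to app-team1
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: deployment-clusterrole
  namespace: app-team1
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: deployment-clusterrole
subjects:
  - kind: ServiceAccount
    name: cicd-token
    namespace: app-team1
```

To check the result, `kubectl auth can-i create deployments --as=system:serviceaccount:app-team1:cicd-token -n app-team1` should answer yes, while the same check against another namespace should answer no.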

Question 30

List all the pods that are serviced by the service "webservice" and copy the output to /opt/$USER/webservice.targets. Note: you need to list the endpoints.

Answer:

Explanation:

    kubectl describe svc webservice | grep -i "Endpoints" > /opt/$USER/webservice.targets
    # or
    kubectl get endpoints webservice > /opt/$USER/webservice.targets

Question 31

Score: 7%

Task

Create a new PersistentVolumeClaim

* Name: pv-volume

* Class: csi-hostpath-sc

* Capacity: 10Mi

Create a new Pod which mounts the PersistentVolumeClaim as a volume:

* Name: web-server

* Image: nginx

* Mount path: /usr/share/nginx/html

Configure the new Pod to have ReadWriteOnce access to the volume.

Finally, using kubectl edit or kubectl patch, expand the PersistentVolumeClaim to a capacity of 70Mi and record that change.

Answer:

Explanation:

See the solution below.

Explanation

Solution:

    vi pvc.yaml

    # PVC that uses the csi-hostpath-sc storage class
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pv-volume
    spec:
      accessModes:
        - ReadWriteOnce
      volumeMode: Filesystem
      resources:
        requests:
          storage: 10Mi
      storageClassName: csi-hostpath-sc

    vi pod-pvc.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: web-server
    spec:
      containers:
        - name: web-server
          image: nginx
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: my-volume
      volumes:
        - name: my-volume
          persistentVolumeClaim:
            claimName: pv-volume

    # Create the PVC and the Pod
    kubectl create -f pvc.yaml
    kubectl create -f pod-pvc.yaml

    # Edit the PVC: set spec.resources.requests.storage to 70Mi and record the change
    kubectl edit pvc pv-volume --record
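A sketch of what the PVC should look like once the edit is saved: only `spec.resources.requests.storage` changes, from 10Mi to 70Mi. The resize only succeeds if the StorageClass csi-hostpath-sc sets `allowVolumeExpansion: true`; that is an assumption about the exam cluster, not something the task states.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-volume
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 70Mi       # was 10Mi; the only field changed by the edit
  storageClassName: csi-hostpath-sc   # assumed to allow volume expansion
```

The same change can be applied without an editor: `kubectl patch pvc pv-volume -p '{"spec":{"resources":{"requests":{"storage":"70Mi"}}}}' --record`.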

Question 32

Create an nginx pod and set an env value as 'var1=val1'. Check that the env value exists within the pod.

- A.

      kubectl run nginx --image=nginx --restart=Never --env=var1=val1
      # then
      kubectl exec -it nginx -- env
      # or
      kubectl exec -it nginx -- sh -c 'echo $var1'
      # or
      kubectl describe po nginx | grep val1
      # or
      kubectl run nginx --restart=Never --image=nginx --env=var1=val1 -it --rm -- env

- B.

      kubectl run nginx --image=nginx --restart=Never --env=var1=val1
      # then
      kubectl exec -it nginx -- env
      # or
      kubectl run nginx --restart=Never --image=nginx --env=var1=val1 -it --rm -- env

Answer: A
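A declarative equivalent of the `kubectl run` command in option A, shown as a sketch. The `run: nginx` label only mirrors what `kubectl run` adds and is optional.

```yaml
# Equivalent of: kubectl run nginx --image=nginx --restart=Never --env=var1=val1
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    run: nginx            # label kubectl run would add (optional)
spec:
  restartPolicy: Never    # matches --restart=Never
  containers:
    - name: nginx
      image: nginx
      env:
        - name: var1      # environment variable name
          value: val1     # environment variable value
```

After `kubectl apply -f` on this file, `kubectl exec nginx -- sh -c 'echo $var1'` should print `val1`; the single quotes make the variable expand inside the container rather than in the local shell.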

Question 33

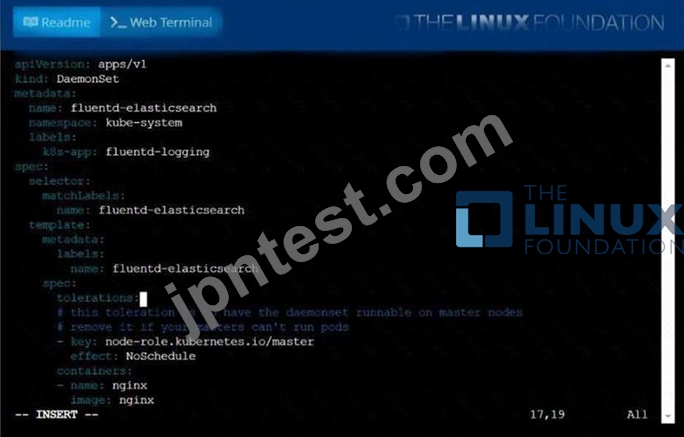

Ensure a single instance of pod nginx is running on each node of the Kubernetes cluster where nginx also represents the Image name which has to be used. Do not override any taints currently in place.

Use a DaemonSet to complete this task, and use ds-kusc00201 as the DaemonSet name.

Answer:

Explanation:

See the solution below.

Explanation

solution
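A minimal DaemonSet sketch for this task. The label `app: ds-kusc00201` is a hypothetical choice; any label works as long as the selector and the pod template agree. No tolerations are set, so existing taints stay in force: a node tainted `NoSchedule` (typically the control-plane node) will not get a copy, which is how "do not override any taints" is honored.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-kusc00201
spec:
  selector:
    matchLabels:
      app: ds-kusc00201   # hypothetical label; must match the template labels below
  template:
    metadata:
      labels:
        app: ds-kusc00201
    spec:
      # No tolerations: taints currently in place are respected, not overridden
      containers:
        - name: nginx
          image: nginx
```

After applying it, `kubectl get ds ds-kusc00201` and `kubectl get pods -o wide` show one nginx pod per schedulable node.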

Question 34

Create a CronJob with the busybox image that prints the date and a "hello from the Kubernetes cluster" message every minute.

- A. CronJob syntax:

      *  --> Minute
      *  --> Hour
      *  --> Day of the month
      *  --> Month
      *  --> Day of the week

      */1 * * * *  --> Execute a command every minute.

      vim date-job.yaml

      apiVersion: batch/v1
      kind: CronJob
      metadata:
        name: date-job
      spec:
        schedule: "*/1 * * * *"
        jobTemplate:
          spec:
            template:
              spec:
                containers:
                  - name: hello
                    image: busybox
                    args:
                      - /bin/sh
                      - -c
                      - date; echo Hello from the Kubernetes cluster
                restartPolicy: OnFailure

      kubectl apply -f date-job.yaml
      # Verify
      kubectl get cj date-job -o yaml

- B. CronJob syntax:

      *  --> Minute
      *  --> Hour
      *  --> Day of the month
      *  --> Month
      *  --> Day of the week

      */1 * * * *  --> Execute a command every minute.

      vim date-job.yaml

      apiVersion: batch/v1
      kind: CronJob
      metadata:
        name: date-job
      spec:
        schedule: "*/1 * * * *"
        jobTemplate:
          spec:
            template:
              - /bin/sh
              - -c
              - date; echo Hello from the Kubernetes cluster
            restartPolicy: OnFailure

      kubectl apply -f date-job.yaml
      # Verify
      kubectl get cj date-job -o yaml

Answer: A

Question 35

Create a Pod with a main container that uses the busybox image and executes "while true; do echo 'Hi I am from Main container' >> /var/log/index.html; sleep 5; done", and a sidecar container that uses the nginx image and exposes port 80. Use an emptyDir volume and mount it at /var/log in the busybox container and at /usr/share/nginx/html in the nginx container. Verify that both containers are running.

- A.

      # Create an initial YAML file
      kubectl run multi-cont-pod --image=busybox --restart=Never --dry-run=client -o yaml > multi-container.yaml
      # Edit the YAML as below
      vim multi-container.yaml

      apiVersion: v1
      kind: Pod
      metadata:
        labels:
          run: multi-cont-pod
        name: multi-cont-pod
      spec:
        volumes:
          - image: busybox
            command: ["/bin/sh"]
            args: ["-c", "while true; do echo 'Hi I am from Main container' >> /var/log/index.html; sleep 5;done"]
            name: main-container
            volumeMounts:
              - name: var-logs
                mountPath: /var/log
          - image: nginx
            name: sidecar-container
            ports:
              mountPath: /usr/share/nginx/html
        restartPolicy: Never

      # Create the Pod
      kubectl apply -f multi-container.yaml
      # Verify
      kubectl get pods

- B.

      # Create an initial YAML file
      kubectl run multi-cont-pod --image=busybox --restart=Never --dry-run=client -o yaml > multi-container.yaml
      # Edit the YAML as below
      vim multi-container.yaml

      apiVersion: v1
      kind: Pod
      metadata:
        labels:
          run: multi-cont-pod
        name: multi-cont-pod
      spec:
        volumes:
          - name: var-logs
            emptyDir: {}
        containers:
          - image: busybox
            command: ["/bin/sh"]
            args: ["-c", "while true; do echo 'Hi I am from Main container' >> /var/log/index.html; sleep 5;done"]
            name: main-container
            volumeMounts:
              - name: var-logs
                mountPath: /var/log
          - image: nginx
            name: sidecar-container
            ports:
              - containerPort: 80
            volumeMounts:
              - name: var-logs
                mountPath: /usr/share/nginx/html
        restartPolicy: Never

      # Create the Pod
      kubectl apply -f multi-container.yaml
      # Verify
      kubectl get pods

Answer: B

Question 36

Scale down the deployment to 1 replica.

Answer:

Explanation:

    kubectl scale deployment webapp --replicas=1
    # Verify
    kubectl get deploy
    kubectl get po,rs

Question 37

Delete the persistent volume and the persistent volume claim.

Answer:

Explanation:

    kubectl delete pvc task-pv-claim
    kubectl delete pv task-pv-volume
    # Verify
    kubectl get pv,pvc

Question 38

Scale the deployment webserver to

Answer:

Explanation:

See the solution below.

Explanation

solution


Question 39

List the logs of the pod named "frontend", search for the pattern "started", and write the matching lines to the file "/opt/error-logs".

Answer:

Explanation:

    kubectl logs frontend | grep -i "started" > /opt/error-logs

Question 40

For this item, you will have to ssh to the nodes ik8s-master-0 and ik8s-node-0 and complete all tasks on these nodes. Ensure that you return to the base node (hostname: node-1) when you have completed this item.

Context

As an administrator of a small development team, you have been asked to set up a Kubernetes cluster to test the viability of a new application.

Task

You must use kubeadm to perform this task. Any kubeadm invocations will require the use of the --ignore-preflight-errors=all option.

* Configure the node ik8s-master-0 as a master node.

* Join the node ik8s-node-0 to the cluster.

Answer:

Explanation:

See the solution below.

Explanation

solution

You must use the kubeadm configuration file located at when initializing your cluster.

You may use any CNI plugin to complete this task, but if you don't have your favourite CNI plugin's manifest URL at hand, Calico is one popular option: https://docs.projectcalico.org/v3.14/manifests/calico.yaml

Docker is already installed on both nodes and has been configured so that you can install the required tools.

Question 41

For this item, you will have to ssh and complete all tasks on these nodes. Ensure that you return to the base node (hostname: ) when you have completed this item.

Context

As an administrator of a small development team, you have been asked to set up a Kubernetes cluster to test the viability of a new application.

Task

You must use kubeadm to perform this task. Any kubeadm invocations will require the use of the --ignore-preflight-errors=all option.

Configure the node ik8s-master-0 as a master node.

Join the node ik8s-node-0 to the cluster.

Answer:

Explanation:

See the solution below.

Explanation

solution

You must use the kubeadm configuration file located at /etc/kubeadm.conf when initializing your cluster.

You may use any CNI plugin to complete this task, but if you don't have your favourite CNI plugin's manifest URL at hand, Calico is one popular option:

https://docs.projectcalico.org/v3.14/manifests/calico.yaml

Docker is already installed on both nodes and apt has been configured so that you can install the required tools.

Question 42

Score: 7%

Task

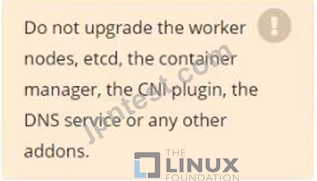

Given an existing Kubernetes cluster running version 1.20.0, upgrade all of the Kubernetes control plane and node components on the master node only to version 1.20.1.

Be sure to drain the master node before upgrading it and uncordon it after the upgrade.

You are also expected to upgrade kubelet and kubectl on the master node.

Answer:

Explanation:

See the solution below.

Explanation

SOLUTION:

    [student@node-1] > ssh ek8s
    kubectl cordon k8s-master
    kubectl drain k8s-master --delete-emptydir-data --ignore-daemonsets --force
    apt-get update
    apt-get install -y kubeadm=1.20.1-00 kubelet=1.20.1-00 kubectl=1.20.1-00
    kubeadm upgrade apply v1.20.1 --etcd-upgrade=false
    systemctl daemon-reload
    systemctl restart kubelet
    kubectl uncordon k8s-master

Question 43

Create and configure the service front-end-service so it's accessible through NodePort and routes to the existing pod named front-end.

Answer:

Explanation:

See the solution below.

Explanation

solution

Question 44

Check the logs of each container in "busyboxpod-{1,2,3}".

- A.

      kubectl logs busybox -c busybox-container-1
      kubectl logs busybox -c busybox-container-3
      kubectl logs busybox -c busybox-container-3

- B.

      kubectl logs busybox -c busybox-container-1
      kubectl logs busybox -c busybox-container-2
      kubectl logs busybox -c busybox-container-3

Answer: B

Question 45

Score: 13%

Task

A Kubernetes worker node, named wk8s-node-0 is in state NotReady. Investigate why this is the case, and perform any appropriate steps to bring the node to a Ready state, ensuring that any changes are made permanent.

Answer:

Explanation:

See the solution below.

Explanation

Solution:

    sudo -i
    # Inspect why the kubelet is not running
    systemctl status kubelet
    # Start it now and enable it so the fix survives a reboot
    systemctl start kubelet
    systemctl enable kubelet

Question 46

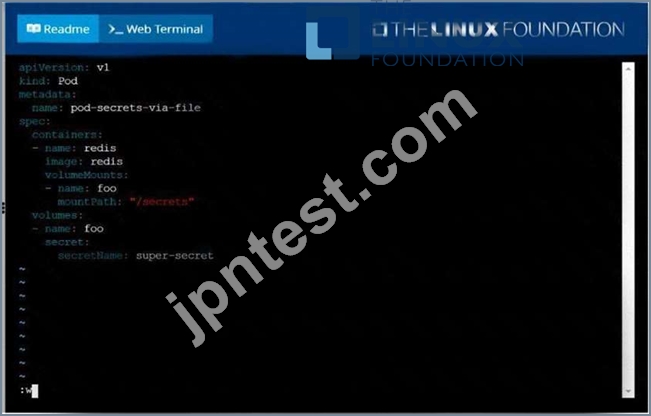

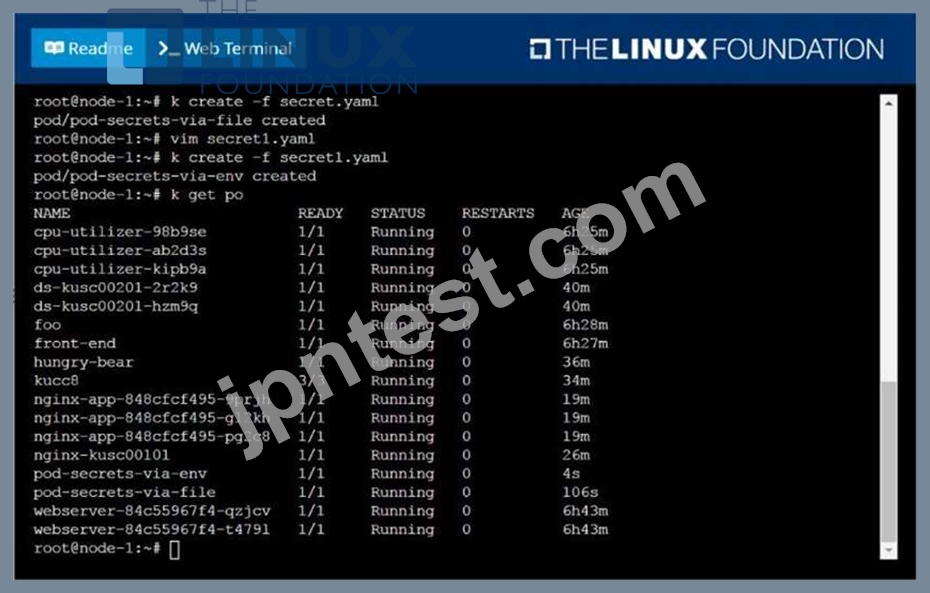

Create a Kubernetes secret as follows:

* Name: super-secret

* password: bob

Create a pod named pod-secrets-via-file, using the redis image, which mounts a secret named super-secret at /secrets.

Create a second pod named pod-secrets-via-env, using the redis image, which exports password as CONFIDENTIAL.

Answer:

Explanation:

See the solution below.

Explanation

solution
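A sketch of all three objects in one manifest. The container names (`redis`) and the volume name `secret-vol` are hypothetical choices not given in the task; the Secret uses `stringData` so the password can be written in plain text, and the API server stores it base64-encoded.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: super-secret
type: Opaque
stringData:
  password: bob                    # stored base64-encoded by the API server
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-secrets-via-file
spec:
  containers:
    - name: redis                  # hypothetical container name
      image: redis
      volumeMounts:
        - name: secret-vol         # hypothetical volume name
          mountPath: /secrets
          readOnly: true
  volumes:
    - name: secret-vol
      secret:
        secretName: super-secret   # each key becomes a file, e.g. /secrets/password
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-secrets-via-env
spec:
  containers:
    - name: redis                  # hypothetical container name
      image: redis
      env:
        - name: CONFIDENTIAL       # env var exposed inside the container
          valueFrom:
            secretKeyRef:
              name: super-secret
              key: password
```

The Secret can also be created imperatively with `kubectl create secret generic super-secret --from-literal=password=bob`. Afterwards, `kubectl exec pod-secrets-via-env -- env | grep CONFIDENTIAL` and `kubectl exec pod-secrets-via-file -- cat /secrets/password` should both show `bob`.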


Question 47

......

Linux Foundation CKA certification exam scope:

| Topic | Exam scope |
|---|---|
| Topic 1 | |
| Topic 2 | |
| Topic 3 | |
| Topic 4 | |
| Topic 5 | |
| Topic 6 | |

The CKA real-exam question and answer PDF covers 100% of the actual exam questions: https://www.jpntest.com/shiken/CKA-mondaishu